Introduction

I like webrings and quite a while ago created a list of all the webrings I could find. Towards the end of each month I update the list by visiting every ring to make sure it is still available and to get the number of sites in it. As there are now over 300 webrings, this takes me most of the day.

It struck me that I should try to automate this self-imposed task, so I started thinking about web scrapers.

Availability

Almost every programming language now has scrapers written in it. For example, a search of MetaCPAN (the Perl module archive) for "scraper" returns 144 results covering various kinds of web scraper. There are several written in Python, several for Node.js and so on.

Legality and robots.txt

Despite how some people feel about web scraping, it is usually perfectly legal. Problems arise if you try to read private databases, use the scraper to log into sites and so on. I do not plan on doing anything like that.

A site's robots.txt file should be respected. I checked the robots.txt files of nearly all the webring sites: most did not have one, and those that did were fairly lenient towards bots.

I should mention how I feel about bots and scrapers in general. Now and then I run across sites that just seem to be collections of material scraped from various other sites. Some are very useful (it is how some of the flight search sites are put together); others just seem a waste of time and of no value to me.

Bots of one sort or another now make up about half of the traffic on the internet. I think that is a pity, but at the same time I feel that using one to visit 300+ sites once a month isn't adding much to the "problem."

Using PowerShell

All I wanted to do was go to the members list of each webring and count how many sites there were. PowerShell has two cmdlets for fetching web pages, Invoke-RestMethod and Invoke-WebRequest. Invoke-RestMethod works for most sites, and for those where the data cannot be retrieved that way, I use Invoke-WebRequest.
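The "try one cmdlet, fall back to the other" approach could be wrapped in a single helper. This is a minimal sketch, not the script's actual code: the function name Get-PageText and the 30-second default timeout are my own assumptions. It also assumes the pages are plain text or HTML, because Invoke-RestMethod converts JSON and XML responses into objects rather than returning text.

```powershell
# Hypothetical helper (not part of the original script): try Invoke-RestMethod first,
# fall back to Invoke-WebRequest, and return the page as text or $null on failure.
function Get-PageText {
    [CmdletBinding()]
    param (
        [string]$Url,
        [int]$TimeoutSec = 30
    )

    try {
        $result = Invoke-RestMethod -Uri $Url -TimeoutSec $TimeoutSec -ErrorAction Stop
        # Invoke-RestMethod turns JSON and XML into objects; only plain text is returned here
        if ($result -is [string]) { return $result }
    }
    catch {
        # Ignore the error and try Invoke-WebRequest instead
    }

    try {
        # -UseBasicParsing avoids the Internet Explorer dependency in Windows PowerShell 5.1;
        # PowerShell 7 accepts it but ignores it
        $response = Invoke-WebRequest -Uri $Url -TimeoutSec $TimeoutSec -UseBasicParsing -ErrorAction Stop
        return [string]$response.Content
    }
    catch {
        Write-Warning "Could not retrieve ${Url}: $($_.Exception.Message)"
        return $null
    }
}
```

One trade-off: if Invoke-RestMethod succeeds but returns objects, the page is fetched a second time.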

The tutorials I found deal mostly with sites that are neatly written with well-ordered data. The sites I want to look at vary widely in how they are written and in how the information I want is laid out. This makes the cmdlets' built-in parsing difficult to use, so I just use them to get each page as text and search that text with regular expressions (regex).
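The script passes its search strings straight to [regex]::Matches. That means regex metacharacters are not treated literally: the "." in "example.com" matches any character, and a string containing "(", such as "<h2>Members (", would throw an error if used as a pattern. A small sketch, assuming you want literal matches, is to pass each string through [regex]::Escape first. The function name Get-MatchCount and the sample HTML are made up for illustration.

```powershell
# Count literal occurrences of a marker string in page text.
# [regex]::Escape stops characters such as "(", "." or "?" from being read as regex syntax.
function Get-MatchCount {
    param (
        [string]$Text,
        [string]$Literal
    )
    $pattern = [regex]::Escape($Literal)
    return ([regex]::Matches($Text, $pattern)).Count
}

# Small made-up page fragment for illustration
$page = @'
<h2>Members (3)</h2>
<li id="site_1"><a href="https://example.org/a">A</a></li>
<li id="site_2"><a href="https://example.org/b">B</a></li>
<li id="site_3"><a href="https://example.org/c">C</a></li>
'@

Get-MatchCount -Text $page -Literal 'id="site_'    # 3
Get-MatchCount -Text $page -Literal 'Members ('    # 1
```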

The script is very simple: seven functions for manipulating the returned text and recording the results, code to create the CSV file that holds the results, and then a long list of the webrings, each with its URL and what to search the page text for.

As each page is laid out differently, I needed the seven functions to:

- count the number of lines in a file (countLines)

- search the text from a certain point to the end of the file (endContent)

- flag that a ring needs checking manually because I couldn't programmatically extract the information I wanted (manualChk)

- read the number of sites where it is actually written on the page (memberTxt)

- search a middle section of the text (midContent)

- search the text from the beginning up to some point in the page (startContent)

- search the entire page text (textCount)

When the information is passed from the webring list to the functions, the function parameters include information about which part of the text to search, as well as what to search for.
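Four of the functions (startContent, midContent, endContent and textCount) follow the same pattern: cut out part of the page between markers, then count a string in that part. As a sketch of how they might be combined, here is one hypothetical function, Get-SectionCount, where the start and end markers are optional. It is my own consolidation rather than the script's code, and it throws if a marker is missing instead of silently miscounting. It uses ordinal (exact character) comparison for IndexOf, and escapes the search string so it is matched literally.

```powershell
# Hypothetical combined helper: count a literal string in the part of the page
# between an optional start marker and an optional end marker.
# No markers = whole page (like textCount); start only = to the end (like endContent);
# end only = from the beginning (like startContent); both = a middle section (like midContent).
function Get-SectionCount {
    param (
        [string]$Text,
        [string]$Find,
        [string]$StartMarker = '',
        [string]$EndMarker = ''
    )

    $ordinal = [System.StringComparison]::Ordinal

    # Default section is the whole page
    $start = 0
    $end = $Text.Length

    if ($StartMarker) {
        $start = $Text.IndexOf($StartMarker, $ordinal)
        if ($start -lt 0) { throw "Start marker not found: $StartMarker" }
    }

    if ($EndMarker) {
        # Only look for the end marker after the start of the section
        $end = $Text.IndexOf($EndMarker, $start, $ordinal)
        if ($end -lt 0) { throw "End marker not found: $EndMarker" }
    }

    # Substring takes a zero-based start index and a length, not an end index
    $section = $Text.Substring($start, $end - $start)
    return ([regex]::Matches($section, [regex]::Escape($Find))).Count
}

$page = 'intro https://a START https://b https://c END https://d'
Get-SectionCount -Text $page -Find 'https://' -StartMarker 'START' -EndMarker 'END'   # 2
```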

Some of the functions also subtract matches that should not be counted. These are usually entries that have been commented out of the page's code, or, in one case, lines in a single text file that just lists sites, with some sort of marker showing which are not in a particular ring.
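A different technique from the one the script uses: rather than counting commented-out entries and subtracting them, the HTML comments could be removed before counting. This sketch only handles HTML comments (<!-- ... -->), which also covers both the "<!-- <p><a href=" and "<!--<p><a href=" spacing variants; it does not handle JavaScript "//" comments, which are hard to strip safely with a regex because every "https://" also contains "//". The function name and sample page are hypothetical.

```powershell
# Remove HTML comments (<!-- ... -->), including ones spanning several lines,
# so that commented-out members are never counted in the first place.
function Remove-HtmlComment {
    param ([string]$Html)
    # (?s) lets "." match newlines; ".*?" is non-greedy so each comment is removed separately
    return [regex]::Replace($Html, '(?s)<!--.*?-->', '')
}

$page = @'
<p><a href="https://example.org/a">A</a></p>
<!-- <p><a href="https://example.org/old">Old</a></p> -->
<!--<p><a href="https://example.org/gone">Gone</a></p>-->
<p><a href="https://example.org/b">B</a></p>
'@

$clean = Remove-HtmlComment -Html $page
([regex]::Matches($clean, [regex]::Escape('<a href='))).Count   # 2
```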

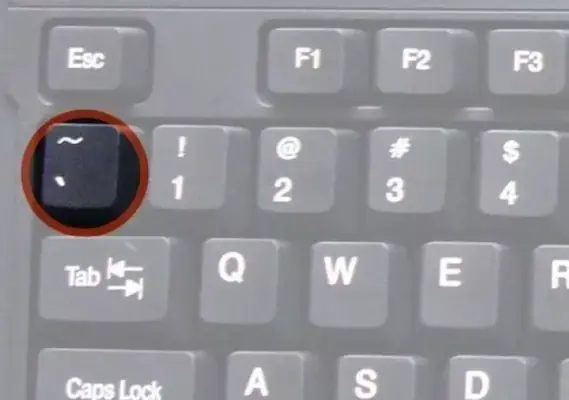

When I was writing the script I used double quotes for the search strings, not realising that many of them would themselves contain double quotes. This can be worked around by preceding each embedded double quote with a backtick, or grave accent (`), which is PowerShell's escape character. On US and UK keyboard layouts it is on the key at the top left, just below Esc (on US keyboards this is the tilde key, ~).
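For comparison, these three lines all produce the same search string. The backtick form is the one the script uses. Single quotes are often simpler for search strings, because nothing inside them is escaped or expanded, so there is no need to worry about embedded double quotes or a stray "$".

```powershell
# Three ways to put a double quote inside a search string
$a = "id=`"site_"     # backtick escape inside double quotes (as in the script)
$b = "id=""site_"     # doubled double quote inside double quotes
$c = 'id="site_'      # single quotes: a literal string, no escaping or variable expansion

$a -eq $b -and $b -eq $c   # True
```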

The Script

function countLines {

param ($ringName, $htmlContent)

$members = $htmlContent | Measure-Object -Line | Select-object -expandproperty Lines

$ignore = " -"

$ignoreLines = ([regex]::Matches($htmlContent, $ignore)).count

$members = $members - $ignoreLines

write-host "$ringName, $members"

"$ringName,$members" | out-file "$PSScriptRoot\webring-members.csv" -append

}

function endContent {

param ($ringName, $htmlContent, $findTxt1, $findTxt2)

$startPosn = $htmlContent.IndexOf($findTxt1)

$htmlContent = $htmlContent.substring($startPosn)

$members = ([regex]::Matches($htmlContent, $findTxt2)).count

write-host "$ringName, $members"

"$ringName,$members" | out-file "$PSScriptRoot\webring-members.csv" -append

}

function manualChk {

param ($ringName)

$members = "Need to check manually"

write-host "$ringName, $members"

"$ringName,$members" | out-file "$PSScriptRoot\webring-members.csv" -append

}

function memberTxt {

param ($ringName, $htmlContent, $findTxt, $offsetTxt, $strLen)

$posn = $htmlContent.IndexOf($findTxt)

$members = [int]$htmlContent.substring($posn+$offsetTxt, $strLen)

write-host "$ringName, $members"

"$ringName,$members" | out-file "$PSScriptRoot\webring-members.csv" -append

}

function midContent {

param ($ringName, $htmlContent, $findTxt1, $findTxt2, $findTxt3)

$startPosn = $htmlContent.IndexOf($findTxt1)

$endPosn = $htmlContent.IndexOf($findTxt2)

$endPosn = $endPosn - $startPosn

$htmlContent = $htmlContent.substring($startPosn, $endPosn)

$members = ([regex]::Matches($htmlContent, $findTxt3)).count

$ignore = "//'http"

$ignoreLines = ([regex]::Matches($htmlContent, $ignore)).count

$members = $members - $ignoreLines

$ignore = "<!-- <p><a href="

$ignoreLines = ([regex]::Matches($htmlContent, $ignore)).count

$members = $members - $ignoreLines

$ignore = "<!--<p><a href="

$ignoreLines = ([regex]::Matches($htmlContent, $ignore)).count

$members = $members - $ignoreLines

write-host "$ringName, $members"

"$ringName,$members" | out-file "$PSScriptRoot\webring-members.csv" -append

}

function startContent {

param ($ringName, $htmlContent, $findTxt1, $findTxt2)

$startPosn = 0

$endPosn = $htmlContent.IndexOf($findTxt1)

# Substring is zero-based: keep everything before $findTxt1

$htmlContent = $htmlContent.substring($startPosn, $endPosn)

$members = ([regex]::Matches($htmlContent, $findTxt2)).count

$ignore = "//'http"

$ignoreLines = ([regex]::Matches($htmlContent, $ignore)).count

$members = $members - $ignoreLines

$ignore = "// 'http"

$ignoreLines = ([regex]::Matches($htmlContent, $ignore)).count

$members = $members - $ignoreLines

$ignore = "example.com"

$ignoreLines = ([regex]::Matches($htmlContent, $ignore)).count

$members = $members - $ignoreLines

write-host "$ringName, $members"

"$ringName,$members" | out-file "$PSScriptRoot\webring-members.csv" -append

}

function textCount {

param ($ringName, $htmlContent, $findTxt)

$members = ([regex]::Matches($htmlContent, $findTxt)).count

write-host "$ringName, $members"

"$ringName,$members" | out-file "$PSScriptRoot\webring-members.csv" -append

}

if (Test-Path -path "$PSScriptRoot\webring-members.csv") { Remove-Item -path "$PSScriptRoot\webring-members.csv" | out-null}

New-Item -path "$PSScriptRoot\" -Name "webring-members.csv" -ItemType "file" -Value "Ring Name,Members`n" | out-null

$ringName = "webring-1"

$htmlContent = Invoke-RestMethod "url-to-webring-1"

countLines $ringName $htmlContent

$ringName = "webring-2"

$htmlContent = Invoke-RestMethod "url-to-webring-2"

$findTxt1 = "These are the current sites listed"

$findTxt2 = "<td><center><b> <a"

endContent $ringName $htmlContent $findTxt1 $findTxt2

$ringName = "webring-3"

$htmlContent = Invoke-RestMethod "url-to-webring-3"

manualChk $ringName

$ringName = "webring-4"

$htmlContent = Invoke-RestMethod "url-to-webring-4"

$findTxt = "<h2>Members ("

$offsetTxt = 13

$strLen = 1

memberTxt $ringName $htmlContent $findTxt $offsetTxt $strLen

$ringName = "webring-5"

$htmlContent = Invoke-RestMethod "url-to-webring-5"

$findTxt1 = "//the full URLs of all the sites in the ring"

$findTxt2 = "var siteswwws"

$findTxt3 = "https://"

midContent $ringName $htmlContent $findTxt1 $findTxt2 $findTxt3

$ringName = "webring-6"

$htmlContent = Invoke-RestMethod "url-to-webring-6"

$findTxt1 = "//the name of the ring"

$findTxt2 = "'https://"

startContent $ringName $htmlContent $findTxt1 $findTxt2

$ringName = "webring-7"

$htmlContent = Invoke-RestMethod "url-to-webring-7"

$findTxt = "id=`"site_"

textCount $ringName $htmlContent $findTxt

The finished script is 1,867 lines long and checks 325 webring sites. To measure how long the script takes to run I used:

$startTime = Get-Date

# ... the main body of the script goes here ...

$endTime = Get-Date

$runTime = New-TimeSpan -Start $startTime -End $endTime

write-host $runTime

This gave the following results:

1 minute, 31 seconds

1 minute, 47 seconds

2 minutes, 3 seconds

2 minutes, 23 seconds

2 minutes, 31 seconds

I think the wide variation in times is due to my internet speed more than anything else.
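PowerShell also has a built-in cmdlet for this, Measure-Command, which runs a script block and returns a TimeSpan, so the separate Get-Date and New-TimeSpan calls are not needed. A sketch, where Start-Sleep is only a stand-in for the real script body. Note that pipeline output produced inside the block is discarded rather than shown.

```powershell
# Measure-Command runs the script block and returns a TimeSpan describing how long it took
$runTime = Measure-Command {
    # ... the main body of the script goes here ...
    Start-Sleep -Milliseconds 200   # placeholder work for this example
}

# Format the TimeSpan in the same style as the results above
'{0} minute(s), {1} second(s)' -f [int][math]::Floor($runTime.TotalMinutes), $runTime.Seconds
```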

Improvements

I suppose the script could have been better written and optimized. It really should check for server timeouts and read the robots.txt files every time it is run.
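Reading robots.txt on every run could start with something like the following. This is a deliberately simplified sketch under my own assumptions: it only detects a blanket "Disallow: /" in a "User-agent: *" group (the whole site closed to all bots) and ignores path-specific rules, Allow lines and wildcards. The function name Test-RobotsAllowsAll is hypothetical. A site with no robots.txt (a 404 response) would be treated as allowing access.

```powershell
# Simplified robots.txt check (a sketch, not a full parser):
# returns $false only when a "User-agent: *" group contains "Disallow: /",
# i.e. the whole site is closed to all bots. Path-specific rules are ignored.
function Test-RobotsAllowsAll {
    param ([string]$RobotsText)

    $inStarGroup = $false
    $lastWasAgent = $false

    foreach ($rawLine in $RobotsText -split "`r?`n") {
        # Drop comments and surrounding whitespace
        $line = ($rawLine -replace '#.*$', '').Trim()
        if (-not $line) { continue }

        $name, $value = $line -split ':', 2
        $name = $name.Trim().ToLowerInvariant()
        $value = if ($null -ne $value) { $value.Trim() } else { '' }

        if ($name -eq 'user-agent') {
            # Consecutive User-agent lines belong to the same group
            if (-not $lastWasAgent) { $inStarGroup = $false }
            if ($value -eq '*') { $inStarGroup = $true }
            $lastWasAgent = $true
        }
        else {
            $lastWasAgent = $false
            if ($inStarGroup -and $name -eq 'disallow' -and $value -eq '/') { return $false }
        }
    }
    return $true
}

# Usage (hypothetical URL):
# $robots = (Invoke-WebRequest -Uri 'https://example.com/robots.txt' -UseBasicParsing).Content
# Test-RobotsAllowsAll -RobotsText $robots
```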

PowerShell writes error messages to the screen; I need to capture these and write them to a file. This would help identify rings that are currently inaccessible and keep my list accurate. These messages go to PowerShell's error stream (stream 2), the stream that Write-Error writes to.
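A sketch of how each request could get a timeout and have its errors logged to a file rather than only shown on screen. The function name Get-RingPage, the log format and the 20-second timeout are my own assumptions. Each ring would then be fetched with something like `$htmlContent = Get-RingPage "webring-1" "url-to-webring-1" "$PSScriptRoot\webring-errors.txt"` and skipped when the result is $null.

```powershell
# Hypothetical wrapper: fetch a page with a timeout and log any failure to a file
# instead of letting the error go only to the screen.
function Get-RingPage {
    param (
        [string]$RingName,
        [string]$Url,
        [string]$LogPath,
        [int]$TimeoutSec = 20
    )

    try {
        # -ErrorAction Stop makes sure any failure becomes an exception that catch can see
        return Invoke-RestMethod -Uri $Url -TimeoutSec $TimeoutSec -ErrorAction Stop
    }
    catch {
        $stamp = Get-Date -Format 'yyyy-MM-dd HH:mm:ss'
        "$stamp | $RingName | $Url | $($_.Exception.Message)" | Out-File -FilePath $LogPath -Append
        return $null
    }
}
```

Without changing the code at all, stream 2 can also be redirected when the script is run, for example `.\check-webrings.ps1 2> errors.txt` (the script name here is hypothetical).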

Writing to the output file can be made faster. See Powershell and writing files, and Speed of loops and different ways of writing to files.

I could shave a little time off the run by not writing the output to the screen and by collecting the results in an array or ArrayList and writing that to the output file in one go, a little more by using PowerShell's Export-Csv, and a lot more by using StreamWriter.
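A sketch of the last two options, using made-up results. Export-Csv needs objects rather than pre-joined strings, so each result is stored as a [pscustomobject]. StreamWriter is given a full path because .NET resolves relative paths against the process's working directory, which is not necessarily PowerShell's current location. The temporary folder here stands in for $PSScriptRoot.

```powershell
# Collect results as objects, then write them once.
# The ring names and counts are made-up sample data.
$results = [System.Collections.Generic.List[object]]::new()
$results.Add([pscustomobject]@{ 'Ring Name' = 'webring-1'; Members = 12 })
$results.Add([pscustomobject]@{ 'Ring Name' = 'webring-2'; Members = 'Need to check manually' })

$outFolder = [System.IO.Path]::GetTempPath()   # the real script would use $PSScriptRoot

# Option 1: Export-Csv writes the header row and quotes each field
$results | Export-Csv -Path (Join-Path $outFolder 'webring-members.csv') -NoTypeInformation

# Option 2: StreamWriter writes the lines directly through .NET
$writer = [System.IO.StreamWriter]::new((Join-Path $outFolder 'webring-members-sw.csv'))
try {
    $writer.WriteLine('Ring Name,Members')
    foreach ($r in $results) {
        $writer.WriteLine("$($r.'Ring Name'),$($r.Members)")
    }
}
finally {
    # Always close the file, even if an error occurs part-way through
    $writer.Dispose()
}
```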

The script seems to have a problem accurately counting text strings in JSON files, especially those hosted on GitHub. I need to look into this and fix it.
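One possible cause, which I have not confirmed for these particular sites: when a server labels a response as JSON, Invoke-RestMethod converts it into PowerShell objects, so a regex run against the result is no longer searching the original text. Parsing the JSON and counting entries avoids regex altogether. This sketch assumes the file is a top-level JSON array of sites; other layouts would need a different property path.

```powershell
# Count members in a JSON list by parsing it instead of matching text.
# Assumption: the file is a top-level JSON array of sites (sample data below is made up).
$json = @'
[
  { "name": "Site A", "url": "https://example.org/a" },
  { "name": "Site B", "url": "https://example.org/b" },
  { "name": "Site C", "url": "https://example.org/c" }
]
'@

# In the real script the text could come from something like
# (Invoke-WebRequest -Uri $url -UseBasicParsing).Content
$sites = $json | ConvertFrom-Json

# @() ensures a single-element result still has a Count of 1
@($sites).Count   # 3
```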

Some rings keep their own count of member sites and display it on their site. I've found that some of these counts are not entirely accurate, so I need to find the actual list, if there is one, and get the information from that. In at least some cases the discrepancy appears to arise because the ring owner forgets to update the stated number when sites join or leave.
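A sketch of how a stated count could be checked against the list itself. It reads the stated number with a regex capture group, which handles any number of digits. The memberTxt approach reads a fixed number of characters ($strLen), so it would need changing if a ring grew from 9 to 10 members. The page text and markers are made-up examples.

```powershell
# Read the number a ring states about itself with a regex capture group,
# then compare it with a count of the actual list entries.
$page = @'
<h2>Members (12)</h2>
<li id="site_1">...</li>
<li id="site_2">...</li>
'@

$stated = $null
$m = [regex]::Match($page, '<h2>Members \((\d+)\)')
if ($m.Success) { $stated = [int]$m.Groups[1].Value }

$counted = ([regex]::Matches($page, [regex]::Escape('id="site_'))).Count

if ($null -ne $stated -and $stated -ne $counted) {
    "Stated $stated but counted $counted, so check manually"
}
```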

Using an ArrayList and Outputting the File at the End of the Script

At the top of the script I added:

$ringList = [System.Collections.ArrayList]::new()

and at the bottom (with -append, so the header row written when the file is created is kept):

$ringList | out-file "$PSScriptRoot\webring-members.csv" -append

In each of the functions I also changed the line:

"$ringName,$members" | out-file "$PSScriptRoot\webring-members.csv" -append

to:

$ringList.add("$ringName,$members") > $null

Without the > $null, the script outputs the index of each new item as it is added to the ArrayList. The run times are now all under 2 minutes:

1 minute, 45 seconds

1 minute, 46 seconds

1 minute, 46 seconds

1 minute, 47 seconds

1 minute, 48 seconds
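The > $null is needed because ArrayList's Add method returns the index of the new item, and PowerShell prints any value that is not captured. The sketch below shows two equivalent ways of discarding it, and a generic List[string], whose Add method returns nothing. Microsoft's .NET documentation recommends the generic List over ArrayList for new code.

```powershell
# ArrayList.Add returns the index of the new item, which is why "> $null" is needed
$ringList = [System.Collections.ArrayList]::new()
$null = $ringList.Add('webring-1,12')      # same effect as "> $null"
[void]$ringList.Add('webring-2,7')         # also discards the return value

# A generic List[string] has an Add method that returns nothing, so no suppression is needed
$ringList2 = [System.Collections.Generic.List[string]]::new()
$ringList2.Add('webring-1,12')
$ringList2.Add('webring-2,7')

$ringList.Count    # 2
$ringList2.Count   # 2
```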

The script is now:

$ringList = [System.Collections.ArrayList]::new()

function outputWrite {

param ($ringName, $members)

write-host "$ringName, $members"

$ringList.add("$ringName,$members") > $null

}

function countLines {

param ($ringName, $htmlContent)

$members = $htmlContent | Measure-Object -Line | Select-object -expandproperty Lines

$ignore = " -"

$ignoreLines = ([regex]::Matches($htmlContent, $ignore)).count

$members = $members - $ignoreLines

outputWrite $ringName $members

}

function endContent {

param ($ringName, $htmlContent, $findTxt1, $findTxt2)

$startPosn = $htmlContent.IndexOf($findTxt1)

$htmlContent = $htmlContent.substring($startPosn)

$members = ([regex]::Matches($htmlContent, $findTxt2)).count

outputWrite $ringName $members

}

function manualChk {

param ($ringName)

$members = "Need to check manually"

outputWrite $ringName $members

}

function memberTxt {

param ($ringName, $htmlContent, $findTxt, $offsetTxt, $strLen)

$posn = $htmlContent.IndexOf($findTxt)

$members = [int]$htmlContent.substring($posn+$offsetTxt, $strLen)

outputWrite $ringName $members

}

function midContent {

param ($ringName, $htmlContent, $findTxt1, $findTxt2, $findTxt3)

$startPosn = $htmlContent.IndexOf($findTxt1)

$endPosn = $htmlContent.IndexOf($findTxt2)

$endPosn = $endPosn - $startPosn

$htmlContent = $htmlContent.substring($startPosn, $endPosn)

$members = ([regex]::Matches($htmlContent, $findTxt3)).count

$ignore = "//'http"

$ignoreLines = ([regex]::Matches($htmlContent, $ignore)).count

$members = $members - $ignoreLines

$ignore = "<!-- <p><a href="

$ignoreLines = ([regex]::Matches($htmlContent, $ignore)).count

$members = $members - $ignoreLines

$ignore = "<!--<p><a href="

$ignoreLines = ([regex]::Matches($htmlContent, $ignore)).count

$members = $members - $ignoreLines

outputWrite $ringName $members

}

function startContent {

param ($ringName, $htmlContent, $findTxt1, $findTxt2)

$startPosn = 0

$endPosn = $htmlContent.IndexOf($findTxt1)

# Substring is zero-based: keep everything before $findTxt1

$htmlContent = $htmlContent.substring($startPosn, $endPosn)

$members = ([regex]::Matches($htmlContent, $findTxt2)).count

$ignore = "//'http"

$ignoreLines = ([regex]::Matches($htmlContent, $ignore)).count

$members = $members - $ignoreLines

$ignore = "// 'http"

$ignoreLines = ([regex]::Matches($htmlContent, $ignore)).count

$members = $members - $ignoreLines

$ignore = "example.com"

$ignoreLines = ([regex]::Matches($htmlContent, $ignore)).count

$members = $members - $ignoreLines

outputWrite $ringName $members

}

function textCount {

param ($ringName, $htmlContent, $findTxt)

$members = ([regex]::Matches($htmlContent, $findTxt)).count

outputWrite $ringName $members

}

if (Test-Path -path "$PSScriptRoot\webring-members.csv") { Remove-Item -path "$PSScriptRoot\webring-members.csv" | out-null}

New-Item -path "$PSScriptRoot\" -Name "webring-members.csv" -ItemType "file" -Value "Ring Name,Members`n" | out-null

$ringName = "webring-1"

$htmlContent = Invoke-RestMethod "url-to-webring-1"

countLines $ringName $htmlContent

$ringName = "webring-2"

$htmlContent = Invoke-RestMethod "url-to-webring-2"

$findTxt1 = "These are the current sites listed"

$findTxt2 = "<td><center><b> <a"

endContent $ringName $htmlContent $findTxt1 $findTxt2

$ringName = "webring-3"

$htmlContent = Invoke-RestMethod "url-to-webring-3"

manualChk $ringName

$ringName = "webring-4"

$htmlContent = Invoke-RestMethod "url-to-webring-4"

$findTxt = "<h2>Members ("

$offsetTxt = 13

$strLen = 1

memberTxt $ringName $htmlContent $findTxt $offsetTxt $strLen

$ringName = "webring-5"

$htmlContent = Invoke-RestMethod "url-to-webring-5"

$findTxt1 = "//the full URLs of all the sites in the ring"

$findTxt2 = "var siteswwws"

$findTxt3 = "https://"

midContent $ringName $htmlContent $findTxt1 $findTxt2 $findTxt3

$ringName = "webring-6"

$htmlContent = Invoke-RestMethod "url-to-webring-6"

$findTxt1 = "//the name of the ring"

$findTxt2 = "'https://"

startContent $ringName $htmlContent $findTxt1 $findTxt2

$ringName = "webring-7"

$htmlContent = Invoke-RestMethod "url-to-webring-7"

$findTxt = "id=`"site_"

textCount $ringName $htmlContent $findTxt

# -append keeps the "Ring Name,Members" header row written by New-Item above

$ringList | out-file "$PSScriptRoot\webring-members.csv" -append

Sources and Resources

About Regular Expressions - Microsoft Learn

About special characters - Microsoft Learn

Dead Internet Theory - Wikipedia

How do I prevent site scraping? - Stack Overflow

How to Do Web Scraping With PowerShell - ZenRows

How to use regular expression in PowerShell - PDQ

Invoke-RestMethod - Microsoft Learn

Invoke-RestMethod - PDQ

Invoke-WebRequest - Microsoft Learn

Invoke-WebRequest - PDQ

New-TimeSpan - Microsoft Learn

New-TimeSpan - SS64

Web Scraping With PowerShell - Oxylabs