Windows/Apache Web Server

Securing Apache

Introduction

This is not a full discussion of securing or hardening an Apache web server; there are people far more qualified and knowledgeable than I am to do that. Instead, this is what I did and why.

The "Server in the Cellar" is very much a standalone machine, and all it contains are the server software and the website files. It doesn't contain anything else and doesn't know about any other computer I might have. The log files show that, ever since I turned it on in 2003, there have been attempts to break out of the web folders or to find out whether I'm running CGI or other scripts. I suppose these are mostly bots doing the work and that I couldn't stop a really determined attack, but it would take a mean-spirited person to disrupt it.

Hardening the server not only protects the website but also its visitors.

The standard security employed by Apache has been good enough since I started using it. I did not get around to installing SSL certificates and HTTPS until October 2022, and that started my interest in hardening the installation a little.

Two good sources of information, as far as I can tell anyway, are Geekflare and, of course, the Apache security documentation.

Site Testing

Server - As I run my own Apache web server, the "Server in the Cellar", I'm interested in tools that can help optimize and protect it. One of the better ones I've found is Detectify. This company once offered free scans for a single site; I started using it then and they still scan my site, even though the offer was withdrawn years ago. Ionos Website Checker, Mozilla Observatory, and Pentest-Tools Website Vulnerability Scanner are also useful.

As the security of the site improves, these site testers give better scores. For example, the Mozilla Observatory score was F on October 23, 2022, B- on November 20, 2022, and B on November 26, 2022. The score on Pentest-Tools Website Vulnerability Scanner went from High to Low in the same period. Detectify reported 19 vulnerabilities with a threat score of 5.8 on October 28, 2022; as of November 12, 2022, that is down to 7 vulnerabilities with a threat score of 4.8.

The "Server in the Cellar" has been running since 2003 and as far as I know, has never come under a serious attack. It is probably too small, and contains nothing of much value to anyone else, to be much of a target but it is an interesting learning experience implementing these recommendations and testing them.

SSL Certificates - I use Let's Encrypt to supply the SSL Certificates, and they have a range of Automated Certificate Management Environment (ACME) clients to manage the certificates. Qualys SSL Labs can check the certificates on the website, as can Ionos SSL Certificate Checker.

Server and Site Information

Even allowing people to see any information about the server is considered a security risk. The "Server in the Cellar" has public server log beautifier results. The nuisance about that is that the referrer links are often spammed or poisoned. Directory listing in Apache is also considered a vulnerability, but I allow it in some folders, mostly for my own convenience. In Apache, I also enabled the mod_status module and made the results of that public. The web page created by mod_status shows what the server is doing at the moment, as well as the uptime, the server version and what the worker threads are doing.

The people who say that exposing any server or site information is a security risk are not being paranoid: the information can be inspected to show exactly what services, scripts etc. are running, which can help an attacker. Because of this, some decision must be made as to whether anything about the site should be made public.

Common Vulnerabilities

It must be said that there is some disagreement as to whether everything listed as a vulnerability by some scans actually is one. There are others listed by the scans that really should be addressed.

In November 2022, I read the information on Geekflare and the Apache security tips, then ran some of the tools listed above to see what else I could and should be doing. I suppose this list is a vulnerability in itself, but here's what I need to look at:

Content Security Policy (CSP)

Cookies

Cross-Site Scripting (XSS and X-XSS)

Cross-Site Tracing (XST)

Mixed Content (HTTPS vs HTTP)

Permissions Policy

Referrer Policy

Security.txt file

Strict Transport Security (HSTS)

Trace request

X-Content-Type-Options

X-Frame-Options

Content Security Policy (CSP)

The Content Security Policy (CSP) is a set of directives that help to detect and mitigate certain types of attacks, including Cross-Site Scripting (XSS) and data injection attacks. These attacks are used for everything from data theft, to site defacement, to malware distribution.

Introductions to CSP include CSP Cheat Sheet, CSP With Google, and MDN Web Docs. Details about the available directives can be found at Content Security Policy (CSP) Quick Reference Guide and MDN Web Docs.

CSP has an option where violations are reported to an endpoint on the server, which can then write them to a local file. I had a very difficult time understanding some of the documentation, especially the endpoints for reporting in the report-uri and the newer report-to directives. Michal Špaček's site, Reporting API Demos, helped me no end in working out what I was supposed to be doing. Reports can also be sent to Report URI, which has a free option, but I wanted to see if I could process the reports myself.
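As a rough illustration of what processing the reports involves: a browser that honours report-uri POSTs a small JSON document whose top-level key is csp-report. The sketch below is in Python rather than the Perl used on this site, and the function name and sample values are made up for the example; it only shows how the interesting fields might be pulled out.

```python
import json

def summarize_csp_report(body: bytes) -> str:
    """Return a one-line summary of a CSP violation report body (report-uri format)."""
    report = json.loads(body.decode("utf-8")).get("csp-report", {})
    # Newer browsers send effective-directive; fall back to violated-directive.
    directive = report.get("effective-directive") or report.get("violated-directive", "?")
    return "{} blocked {} on {}".format(
        directive,
        report.get("blocked-uri", "?"),
        report.get("document-uri", "?"),
    )

# Hypothetical report body, shaped like the JSON a browser would POST.
sample = json.dumps({
    "csp-report": {
        "document-uri": "https://example.com/page.htm",
        "violated-directive": "frame-ancestors",
        "blocked-uri": "https://other.example/",
    }
}).encode("utf-8")

summary = summarize_csp_report(sample)
print(summary)
```

A real handler would also need to read the POST body from the web server and append the summary to a log file; that part depends on how the script is hosted and is left out here.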

When writing my CSP what worried me most was that I had already written lots of pages and I would set the policy so strictly some of the pages would lose their functionality. Luckily I have not used many outside sources so the list of those I had to provide for was fairly small. I suppose if you really tried, you could set the security policies so strictly, no one could view the sites at all!

In order for CSP directives to work the Apache mod_headers module must be enabled. My main Apache configuration file, httpd.conf, lists all the available modules with the ones not being used commented out with a # in front of them. I found the entry:

#LoadModule headers_module modules/mod_headers.so

and removed the #. If it had not been there at all, I would have written the line in. This simply means the mod_headers module loads when the Apache service is restarted.

The policy is set in the Virtual Hosts configuration file, httpd-vhosts.conf, in each of the HTTPS port 443 sections (not the HTTP port 80 ones). The CSP directives I use are:

Header set Content-Security-Policy "frame-ancestors 'self'; upgrade-insecure-requests; report-uri /cgi-bin/csp-error.pl"
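To see how a browser or a checking tool reads a policy like this, it can help to split it into its directives. The following is a minimal Python sketch (the function name is an assumption for the example): directives are separated by semicolons, the first word of each is its name, and a repeated directive is ignored after its first appearance.

```python
def parse_csp(policy: str) -> dict:
    """Split a Content-Security-Policy value into {directive: [values]}."""
    directives = {}
    for part in policy.split(";"):
        tokens = part.split()
        if tokens:
            # First token is the directive name, the rest are its values.
            # setdefault keeps only the first occurrence of a repeated directive.
            directives.setdefault(tokens[0].lower(), tokens[1:])
    return directives

policy = "frame-ancestors 'self'; upgrade-insecure-requests; report-uri /cgi-bin/csp-error.pl"
parsed = parse_csp(policy)
for name, values in parsed.items():
    print(name, values)
```

Run against the policy above, this lists three directives, with upgrade-insecure-requests taking no values.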

There are several tools available to check the validity of a website's Content Security Policy (CSP). Among them are Content Security Policy (CSP) Validator, CSP Evaluator, Csper Policy Evaluator, Hardenize, Mozilla Observatory, Pentest-Tools Website Vulnerability Scanner, and Security Headers.

Cookies

I do not use my own cookies on this site, but utilities I make use of such as Google Analytics, YouTube and Microsoft's Clarity do. While writing this page something changed in the developer mode for Chrome, Edge and Firefox. The warnings given are all similar:

Indicate whether to send a cookie in a cross-site request by specifying its SameSite attribute. Because a cookie’s SameSite attribute was not set or is invalid, it defaults to SameSite=Lax, which prevents the cookie from being sent in a cross-site request. This behavior protects user data from accidentally leaking to third parties and cross-site request forgery. Resolve this issue by updating the attributes of the cookie: Specify SameSite=None and Secure if the cookie should be sent in cross-site requests. This enables third-party use. Specify SameSite=Strict or SameSite=Lax if the cookie should not be sent in cross-site requests.

After reading sites such as Chrome's Changes Could Break Your App, Set-Cookie, Using HTTP cookies, and more, I couldn't find any header setting that could remove the warnings from the browsers. It might even be something to do with the cookies themselves, in which case there is nothing I can do.
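The warning is about attributes on the cookie itself, which are chosen by whichever party sets the cookie. As an illustration only, this Python sketch builds the kind of Set-Cookie line the warning asks for; the cookie name and value are hypothetical, and the samesite attribute needs Python 3.8 or later.

```python
from http.cookies import SimpleCookie

cookie = SimpleCookie()
cookie["example_id"] = "abc123"            # hypothetical cookie name and value
cookie["example_id"]["samesite"] = "None"  # allow the cookie in cross-site requests...
cookie["example_id"]["secure"] = True      # ...which browsers only accept together with Secure

header_line = cookie.output()
print(header_line)
```

A cookie meant for same-site use only would carry SameSite=Lax or SameSite=Strict instead.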

Cross-Site Scripting (XSS and X-XSS)

Cross-site scripting works by manipulating a vulnerable web site so that it returns malicious script to users. This usually affects websites that have some sort of interaction with a visitor such as a form, guestbook, comments section or similar. A malicious user could inject malicious script into the text which is then returned to legitimate visitors. Worse still, the script could be injected into the server itself which means every visitor could be vulnerable to an attack.

Best practices for websites to guard against propagating XSS attacks include sanitizing everything submitted to a website's forms, guestbook etc. so there is nothing executable in them. A properly crafted Content Security Policy (CSP) will help as will looking through the server logs. Cross-site scripting is so widespread and dangerous there are lots of sites that explain it. There is one site that I found particularly interesting. It is from Acunetix by Invicti and shows them tracing a SQL Injection Attack through a server's log files.
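A minimal sketch of the sanitizing step, assuming a Python script receives the submitted text (the function name is made up for the example). Escaping the HTML special characters means a browser displays the submission as text instead of running it.

```python
import html

def sanitize_comment(text: str) -> str:
    """Escape HTML special characters so submitted text cannot run as markup."""
    return html.escape(text, quote=True)

submitted = '<script>alert("hi")</script>'
safe = sanitize_comment(submitted)
print(safe)
```

Escaping on output is only one layer; it does not replace validating input or a well-crafted CSP.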

There was another policy that could have been implemented, and that is X-XSS (the X-XSS-Protection header). MDN Web Docs and OWASP recommend that this is no longer used, as it could create further vulnerabilities.

This has not been set on my server.

Cross-Site Tracing (XST) and Trace Requests

This appears to be one of the information vulnerabilities, based on what someone can find out about a server. In 2003, Jeremiah Grossman wrote a paper outlining a risk allowing an attacker to steal information including cookies, and possibly website credentials, using HTTP Trace. Even when the paper was published, ApacheWeek said that "Although the particular attack highlighted made use of the TRACE functionality to grab authentication details, this isn't a vulnerability in TRACE, or in the Apache web server. The same browser functionality that permits the published attack can be used for different attacks even if TRACE is disabled on the remote web server."

In 2022, none of the common browsers use TRACE, and it has been disabled in JavaScript. Apache say in their TraceEnable Directive documentation that "Despite claims to the contrary, enabling the TRACE method does not expose any security vulnerability in Apache httpd. The TRACE method is defined by the HTTP/1.1 specification and implementations are expected to support it."

I have not turned this off on the server.

Mixed Content (HTTPS vs HTTP)

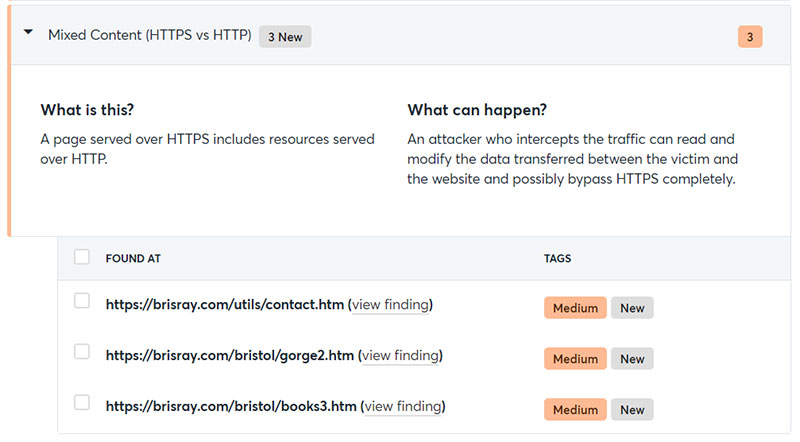

The website checker Detectify once offered free checks for personal, non-commercial sites. I took advantage of that and was grandfathered in when they no longer offered it. Shortly after I added the SSL certificate they did a scan of the site and something new turned up in the report they make. Three of my pages came up with the warning that there were files being served as Mixed Content (HTTPS vs HTTP). Looking at the report it said that the three pages were displaying HTTP content through the HTTPS connection. What was happening?

Mixed Content (HTTPS vs HTTP) warning from Detectify

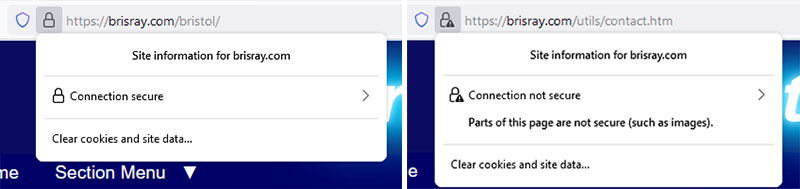

Chrome, my usual browser, had the usual secured padlock, so I tried the site in Edge, Firefox, and Opera. The only browser that showed any sign that something was amiss was Firefox, which showed a small warning triangle by the padlock.

Firefox padlocks: secure and with a warning

Why was this HTTP content still displaying and why didn't the browsers seem to treat it very seriously?

I opened the developer section of the browsers by pressing F12, and they all seemed to say the same thing:

Mixed Content: The page was loaded over HTTPS, but requested an insecure element. This request was automatically upgraded to HTTPS, For more information...

I took a look at the pages and sure enough there were HTTP links in them. The browsers had automatically changed those to HTTPS requests and downloaded the content. They were only images, but would the browsers have issued a stronger warning, or not downloaded the content at all, if a HTTPS connection was not available or if the link was to a different file type?

As there were no warnings given by most browsers, I looked around to see if there was some sort of online checker. There are sites that will check individual pages for HTTP vs HTTPS content, but there are others that will attempt to crawl an entire site. Two of the best I have found are JitBit SSL Check and Missing Padlock - SSL Checker. HTTPS Checker has a free downloadable checker. It works very well but the free edition is limited to 500 pages, which is OK for my sites.

These tools are very useful for finding mixed content, and especially useful for finding pages that now have content completely blocked by my sites' Content Security Policy (CSP) rules. Some pages that were flagged by these tools were found to be missing content from places like YouTube where I had used HTTP instead of HTTPS to call it. The browser developer tools gave the following error:

Mixed Content: The page was loaded over HTTPS, but requested an insecure plugin resource. This request has been blocked; the content must be served over HTTPS.

Needless to say, the pages highlighted by Detectify and the other tools are being checked and the content links upgraded. Some of the tools do not find ordinary links to HTTP sites but others do. Where I have found them, I have changed them to HTTPS but some sites have not enabled SSL and so those links have to remain as HTTP.
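The difference between loaded content and ordinary links can be shown with a small scanner. This is a Python sketch under simple assumptions, not how any of the tools above work: anything fetched through a src attribute (or a link tag's href) is treated as a resource that can trigger a mixed content warning, while an http:// address in an ordinary a tag is only a link worth upgrading. The class name and sample page are made up for the example.

```python
from html.parser import HTMLParser

class MixedContentFinder(HTMLParser):
    """Collect http:// URLs, separating loaded resources from ordinary links."""

    def __init__(self):
        super().__init__()
        self.resources = []  # images, scripts, frames: candidates for mixed content
        self.links = []      # plain <a href>: not mixed content, but worth upgrading

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if not value or not value.lower().startswith("http://"):
                continue
            if name == "src" or (tag == "link" and name == "href"):
                self.resources.append(value)
            elif tag == "a" and name == "href":
                self.links.append(value)

page = """<img src="http://example.com/pic.png">
<a href="http://example.org/">old link</a>
<img src="https://example.com/ok.png">"""

finder = MixedContentFinder()
finder.feed(page)
print("resources:", finder.resources)
print("links:", finder.links)
```

Only the first image is reported as a resource; the HTTPS image is ignored and the plain link is listed separately.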

Permissions Policy

The Permissions Policy is a set of rules in the web page's HTTP header that controls which features of a browser or its extensions are allowed to act on the page. Some of the policies seem arcane, but as a post on StackExchange says:

Your browser contains a lot of features. You as a website operator know which features you do not use. Normally, these features would never be activated since you did not implement them. But in case of a different flaw, an XSS vulnerability for instance, certain features might get activated.

As a second line of defence, the Permissions-Policy header could be used to disable specific features entirely, meaning that while the XSS vulnerability remains, the attacker would not be able to enable these features.

Permissions Policy was called Feature Policy until 2020, and this is how MDN Web Docs refers to it. Chrome Developers is another page worth looking at to understand the various directives.
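The header value itself is a comma-separated list of features, each followed by an allowlist in brackets; an empty allowlist disables the feature everywhere. This page does not say which features, if any, were restricted on this server, so the feature names in the Python sketch below are purely illustrative, as is the function name.

```python
def build_permissions_policy(features: dict) -> str:
    """Build a Permissions-Policy value from {feature: [allowlist entries]}."""
    parts = []
    for feature, allowlist in features.items():
        # An empty allowlist, written (), turns the feature off for everyone.
        parts.append("{}=({})".format(feature, " ".join(allowlist)))
    return ", ".join(parts)

header_value = build_permissions_policy({
    "geolocation": [],        # illustrative: disabled entirely
    "camera": [],             # illustrative: disabled entirely
    "fullscreen": ["self"],   # illustrative: allowed for the site's own pages only
})
print("Permissions-Policy:", header_value)
```

The resulting string is what would go inside the quotes of a Header set line in the Apache configuration.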

Referrer Policy

When someone clicks on a link on a webpage, one piece of information that is sent is the page the visitor clicked on to get to the new page. What is sent is controlled by the Referrer Policy. This is one of the easier directives to understand and there are several sites that explain it very well, such as Geeks for Geeks, Mozilla's MDN Web Docs, and Google's Web Dev.

After reading these and others, the following line was added to this site's httpd.conf file:

Header always set Referrer-Policy strict-origin-when-cross-origin

After adding this line, pages on my own site get the referrer mysite.com/mypage.htm; other HTTPS sites get mysite.com/ and HTTP sites don't get any referrer information.
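The three outcomes described above can be written down as a small decision function. This is a simplified Python sketch of the strict-origin-when-cross-origin rule, not browser code: it compares scheme and host as written, without normalizing default ports, and the function name and URLs are made up for the example.

```python
from urllib.parse import urlsplit

def referrer_sent(from_url: str, to_url: str) -> str:
    """Referrer a browser would send under strict-origin-when-cross-origin (simplified)."""
    src, dst = urlsplit(from_url), urlsplit(to_url)
    # HTTPS page linking to an HTTP page is a downgrade: nothing is sent.
    if src.scheme == "https" and dst.scheme != "https":
        return ""
    # Same origin (scheme and host match): the full URL, minus any #fragment.
    if (src.scheme, src.netloc) == (dst.scheme, dst.netloc):
        return src._replace(fragment="").geturl()
    # Cross-origin at the same security level: the origin only.
    return "{}://{}/".format(src.scheme, src.netloc)

page = "https://mysite.com/mypage.htm"
print(repr(referrer_sent(page, "https://mysite.com/other.htm")))
print(repr(referrer_sent(page, "https://example.org/page")))
print(repr(referrer_sent(page, "http://example.org/page")))
```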

target="_blank" - There is a scenario written about by Alex Yumashev in The most underestimated vulnerability ever, where if target="_blank" is used on a site to open a new page in a new browser tab, then the linked-to page can take over the original page's tab. Although most browsers now automatically add rel="noopener" to the request, where I use target="_blank", I always add rel="noopener noreferrer" to the link.
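Finding older links that still lack the rel attribute is easy to automate. A minimal Python sketch, with a made-up class name and sample markup, that lists every target="_blank" link carrying neither noopener nor noreferrer (noreferrer on its own also implies noopener):

```python
from html.parser import HTMLParser

class BlankTargetChecker(HTMLParser):
    """List links that open a new tab without rel="noopener" or rel="noreferrer"."""

    def __init__(self):
        super().__init__()
        self.unsafe = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        if (attr.get("target") or "").lower() != "_blank":
            return
        rel = (attr.get("rel") or "").lower().split()
        if "noopener" not in rel and "noreferrer" not in rel:
            self.unsafe.append(attr.get("href"))

page = """<a href="https://example.org/a" target="_blank">a</a>
<a href="https://example.org/b" target="_blank" rel="noopener noreferrer">b</a>
<a href="https://example.org/c">c</a>"""

checker = BlankTargetChecker()
checker.feed(page)
print(checker.unsafe)
```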

Referrer vs Referer - The confusion over the spelling dates back to 1995, when computer scientists Phillip Hallam-Baker and Roy Fielding wrote the Request for Comments for the new protocol. Some instances in web pages now have to use referer as in the referer header field, others have to use referrer as in Referrer-Policy and rel="noopener noreferrer".

Security.txt File

This is an interesting concept: give people who find a security hole in your setup the email address of someone they can report the problem to, as well as some other information. Michal Špaček writes why organizations should have a Security.txt file and what should be in it on his site. Hacker News has an article about why an organization had one then removed it.

I am sysop, back and front end developer, content provider and dogsbody for my server and sites. I get lots of emails from people saying they can redesign my site for me, improve the SEO, and people willing to write guest articles for me, all at "competitive rates". No one has ever contacted me about the security of the site so a Security.txt file is not something I am particularly bothered about.

Strict Transport Security (HSTS)

HTTP Strict Transport Security (HSTS) is a web security policy mechanism used for securing HTTPS websites against downgrade attacks. Although a user may initially access the site over a HTTP connection, HSTS informs browsers that the site should only be accessed using HTTPS, and that any future attempts to access it using HTTP should automatically be converted to HTTPS. This is more secure than simply configuring a HTTP to HTTPS (301) redirect on your server, which I have also done, because with a redirect alone the initial HTTP connection is still vulnerable to a man-in-the-middle attack.

The Open Web Application Security Project (OWASP) identifies other threats that HSTS protects against:

User bookmarks or manually types http://example.com and is subject to a man-in-the-middle attacker. HSTS automatically redirects HTTP requests to HTTPS for the target domain.

Web application that is intended to be purely HTTPS inadvertently contains HTTP links or serves content over HTTP. HSTS automatically redirects HTTP requests to HTTPS for the target domain.

A man-in-the-middle attacker attempts to intercept traffic from a victim user using an invalid certificate and hopes the user will accept the bad certificate. HSTS does not allow a user to override the invalid certificate message.

Implementing this weighs heavily in most site tests and should be done if SSL Certificates are used.

In the Virtual Hosts configuration file, httpd-vhosts.conf, for each of the HTTPS port 443 sections (not the HTTP port 80 ones), the following line was added:

Header always set Strict-Transport-Security "max-age=63072000; includeSubDomains"

max-age in the above line is set to 63072000 seconds, which is two years.
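The arithmetic behind that figure, as a short Python sketch (the function name is made up for the example, and a year is taken as 365 days):

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86400

def hsts_value(years: int, include_subdomains: bool = True) -> str:
    """Build a Strict-Transport-Security value with max-age given in whole years."""
    max_age = years * 365 * SECONDS_PER_DAY
    value = "max-age={}".format(max_age)
    if include_subdomains:
        value += "; includeSubDomains"
    return value

two_years = hsts_value(2)
print(two_years)
```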

X-Content-Type-Options

This directive stops browsers from trying to guess the Multipurpose Internet Mail Extensions (MIME) type of a file. Suppose someone uploads a file to your website as an image file, but it is actually a JavaScript file. The nosniff option of X-Content-Type-Options prevents a visitor's browser from examining the file, discovering that it is actually an executable file, and running it. I added the following line to httpd.conf to turn MIME type sniffing off.

Header set X-Content-Type-Options "nosniff"

X-Frame-Options - Iframes and Clickjacking

The threat from someone putting a site in their iframe arises because they could overlay the iframe with a hidden one, so clicking on what visitors think is a link on your site does something else entirely. Sites such as PortSwigger explain the threat, also known as UI Redressing, and what to do about it.

To help protect users from this, I added the lines

Header always set X-Frame-Options "sameorigin"

Header set Content-Security-Policy "frame-ancestors 'self'"

to the Apache httpd.conf file.

I allowed "self" and "sameorigin" as some of my older pages use iframes for menus, demonstrations and so on.

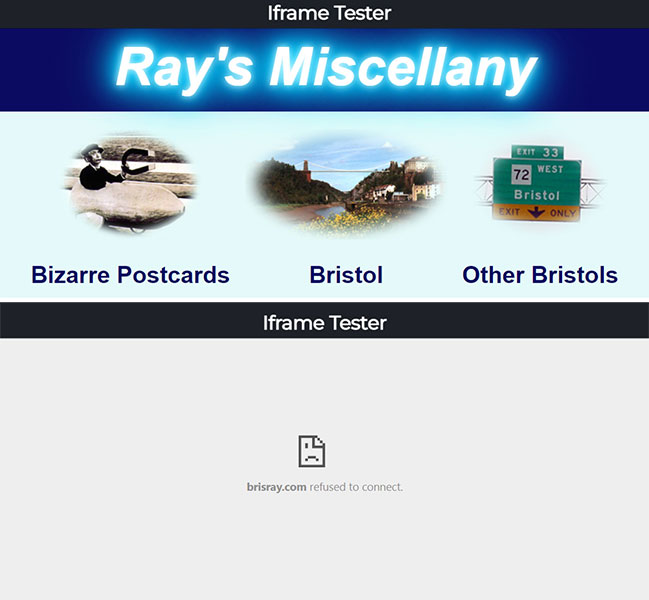

To test the effect these lines were having, I used IframeTester, W3Schools iframe, and Geekflare X-Frame-Options Header Test.

I also looked at what header information was being sent from my pages using 4Tools HTTP Headers, Security Headers (which also performs some other tests), and Web Sniffer.

Iframe Tester results: Before and after

My Security Headers and Policies

I have some sites that cannot use SSL Certificates and so are not available over HTTPS. This is because of the way that they were set up many years ago. Therefore the policies in the vhosts configuration file are only set for the HTTPS (port 443) listener sections.

httpd.conf - the policies in the main configuration file are:

Header always set Referrer-Policy strict-origin-when-cross-origin

Header always set X-Content-Type-Options "nosniff"

Header always set X-Frame-Options "sameorigin"

httpd-vhosts.conf - the policies in the vhosts configuration file, HTTPS (port 443) listener sections only, are:

Header always set Strict-Transport-Security "max-age=63072000; includeSubDomains"

Header set Content-Security-Policy "frame-ancestors 'self'; upgrade-insecure-requests; report-uri /cgi-bin/csp-error.pl"
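A quick way to confirm that a response carries these five headers is to compare them against the list above. The Python sketch below works on a plain dictionary of headers so it can be tried without a network connection; the names and the sample response are made up for the example. For a live site the dictionary could be filled from dict(urllib.request.urlopen(url).headers.items()).

```python
# Headers whose value should match exactly (compared case-insensitively).
EXPECTED_VALUES = {
    "referrer-policy": "strict-origin-when-cross-origin",
    "x-content-type-options": "nosniff",
    "x-frame-options": "sameorigin",
}
# Headers that only need to be present on HTTPS responses.
EXPECTED_PRESENT = ("strict-transport-security", "content-security-policy")

def check_headers(headers: dict) -> list:
    """Return the names of expected security headers that are missing or different."""
    found = {name.lower(): value for name, value in headers.items()}
    problems = []
    for name, wanted in EXPECTED_VALUES.items():
        if found.get(name, "").strip().lower() != wanted:
            problems.append(name)
    for name in EXPECTED_PRESENT:
        if name not in found:
            problems.append(name)
    return problems

# Hypothetical response that is missing its Content-Security-Policy header.
sample = {
    "Referrer-Policy": "strict-origin-when-cross-origin",
    "X-Content-Type-Options": "nosniff",
    "X-Frame-Options": "SAMEORIGIN",
    "Strict-Transport-Security": "max-age=63072000; includeSubDomains",
}
missing = check_headers(sample)
print(missing)
```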