Introduction

Over time, log files that are left unattended can grow very big, often several gigabytes. As a rough guide, Apache's logs grow by about 1 MB for every 10,000 requests. For this reason, logs are usually rotated: split after a certain time or once they grow to a certain size. Apache provides a program to do this, rotatelogs. Unfortunately, I found it does not work correctly on Windows machines because of the way Windows locks open files.

My requirements were slightly different. I wanted to split the main log files for my sites into monthly files. Each monthly file keeps the name of the original log, such as brisray-access, followed by the year and two-digit month, so they end up as brisray-access-2023-01.log, brisray-access-2023-02.log, brisray-access-2023-03.log and so on.
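The naming scheme can be captured in a small helper. This is a minimal sketch, not part of the script later in this article: Get-MonthlyLogName is a hypothetical name, and it uses the invariant culture so the result does not depend on the PC's regional settings.

```powershell
# Hypothetical helper (not part of the script in this article): build a monthly
# log file name such as brisray-access-2023-01.log from a base name and a date.
function Get-MonthlyLogName {
    param(
        [Parameter(Mandatory)][string]$BaseName,
        [Parameter(Mandatory)][datetime]$Date,
        [string]$Extension = '.log'
    )
    # 'yyyy-MM' is the four-digit year and two-digit month; the invariant
    # culture keeps the result independent of the regional settings
    $monthPart = $Date.ToString('yyyy-MM', [cultureinfo]::InvariantCulture)
    '{0}-{1}{2}' -f $BaseName, $monthPart, $Extension
}

# Usage: prints brisray-access-2023-01.log, brisray-access-2023-02.log and brisray-access-2023-03.log
1..3 | ForEach-Object { Get-MonthlyLogName -BaseName 'brisray-access' -Date ([datetime]::new(2023, $_, 1)) }
```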

For a while I did this by hand. A couple of days after the end of each month I would go through every file and copy and paste the relevant lines into new files.

Controlling HTTPD

Apache on Windows can be run either as a console application or, more usually (and as I do), as a service. My sites are non-critical, and the updates I make to the server and its configuration are minor and quick. For these reasons, I make the changes and then restart the service.

I have read all sorts of things about the HTTPD program, how it loads the Apache configuration files, and how it works with the server service. Some of what I read on various sites seemed contradictory or wrong, so I looked at what actually happens.

httpd.exe should be in the bin folder of the Windows Apache installation. Running httpd at a command line with /?, -?, /h or -h shows the list of available switches. The one I was interested in is httpd -k restart, which tells Apache to do a graceful restart. What Apache does after receiving this command is detailed in the Apache documentation.

Practically this is what happens:

The program process identifier (PID) is updated

The server status page is not shut down, and its recorded values are not reset. According to the documentation, this is because the software has to keep track of server requests to minimize the time it is offline.

Any changes made to the configuration files take effect when the server restarts

I repeatedly refreshed the Windows Service Management Console but did not see the Apache service actually stop. Apache's error log, however, shows that it did:

[Tue Mar 12 22:46:40.790908 2024] [core:notice] [pid 16448:tid 408] AH00094: Command line: 'c:\\Apache24\\bin\\httpd.exe -d C:/Apache24'

[Tue Mar 12 22:46:40.790908 2024] [mpm_winnt:notice] [pid 16448:tid 408] AH00418: Parent: Created child process 15260

[Tue Mar 12 22:46:41.782885 2024] [mpm_winnt:notice] [pid 15260:tid 456] AH00354: Child: Starting 64 worker threads.

[Tue Mar 12 22:46:42.794904 2024] [mpm_winnt:notice] [pid 14128:tid 424] AH00364: Child: All worker threads have exited.

The 'Apache2.4' service is restarting.

The 'Apache2.4' service has restarted.

winnt:notice] [pid 16448:tid 408] AH00424: Parent: Received restart signal -- Restarting the server.

[Tue Mar 12 22:47:22.614928 2024] [mpm_winnt:notice] [pid 16448:tid 408] AH00455: Apache/2.4.54 (Win64) OpenSSL/1.1.1s configured -- resuming normal operations

[Tue Mar 12 22:47:22.614928 2024] [mpm_winnt:notice] [pid 16448:tid 408] AH00456: Server built: Nov 4 2022 20:49:29
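Rather than refreshing the Services console, the error log itself can be searched for the restart messages. A minimal sketch: Find-ApacheRestartEntries is a hypothetical name, the default path assumes Apache's standard C:\Apache24\logs\error.log location, and AH00424 ("Received restart signal") and AH00455 ("resuming normal operations") are the message IDs that appear in the log excerpt above.

```powershell
# Hypothetical helper: list the error-log entries that record a restart.
# AH00424 = "Received restart signal", AH00455 = "resuming normal operations".
function Find-ApacheRestartEntries {
    param(
        [string]$Path = 'C:\Apache24\logs\error.log',   # assumed default location
        [string[]]$MessageIds = @('AH00424', 'AH00455')
    )
    # Select-String reads the file line by line; -SimpleMatch treats the IDs as plain text
    Select-String -Path $Path -Pattern $MessageIds -SimpleMatch |
        ForEach-Object { $_.Line }
}

# Usage: show the last four restart-related entries
# Find-ApacheRestartEntries | Select-Object -Last 4
```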

If the Apache service is not running, the command starts it.

Monthly Files Using PowerShell

Each of my sites produces an access file, in combined format, and an error file. What I would do is stop the Apache service, copy the log files to a working directory, restart the Apache service, split the files by hand, copy the new files back to the main log folder and put the original into a folder named "originals" so nothing would get lost.

After doing this for a while, I decided to automate the process with a PowerShell script. The script needed to:

Make an array of the files to be processed

Stop the Apache service, and make sure it is stopped before proceeding

Move the files to be processed to a working folder

Restart the Apache service

Work through the array, checking the date in each record and writing the records to new monthly files

Copy the new files to their final folders: the monthly files back to the main log folder and the originals to the originals folder

What I came up with is this:

# Lines in the live code but not used for testing are marked ###

# Variables and constants

New-Variable -Name baseDir -Value 'C:\Apache24\logs' -Option Constant

New-Variable -Name workDir -Value 'C:\Apache24\logs\working' -Option Constant

New-Variable -Name originalsDir -Value 'C:\Apache24\logs\originals' -Option Constant

New-Variable -Name serviceName -Value 'Apache2.4' -Option Constant

# The following paths are for testing

# New-Variable -Name baseDir -Value 'C:\Users\brisr\Documents\logtest' -Option Constant

# New-Variable -Name workDir -Value 'C:\Users\brisr\Documents\logtest\working' -Option Constant

# New-Variable -Name originalsDir -Value 'C:\Users\brisr\Documents\logtest\originals' -Option Constant

# The log analysers use UTF-8 encoding so enforce that in PowerShell

$PSDefaultParameterValues['*:Encoding'] = 'utf8'

# Functions must be written before they are called!

function set-myDateString {

<# Need the month and year from the log files. These always start after the first [

The position of the first [ varies in the access logs, but it is always the first character in the error logs

In the access logs the date/time format is [02/Aug/2021:00:05:02 -0400] or [dd/MMM/yyyy:HH:mm:ss +/-hhmm offset from GMT]

In the error logs the date/time format is [Mon Aug 02 04:54:05.980470 2021] or [ddd MMM dd HH:mm:ss.ffffff yyyy]

The end format should be MMM/yyyy #>

#If access file, get the start position of the first [

if ($($item.name).indexOf('access.log') -gt -1){

$startStr = $readLine.IndexOf('[') + 4

$dateStr = $readLine.Substring($startStr, 8)

}

else {

# If error file, get the various parts of the date

$dateStr = $readLine.Substring(5,3) + '/' + $readLine.Substring(28,4)

}

$script:dateStr = $dateStr

}

<# The "live" access and error files are *access.log and *error.log without dates so use those with wildcards

No need to process 0 length files

These files names are put into the array $logFiles #>

$logFiles = @(Get-ChildItem -Path $baseDir\* -Include '*access.log','*error.log' | Where-Object -Property Length -gt 0)

# Check if the working and originals folders exist; if not, create them. > $null suppresses the output

mkdir -Force $workDir > $null

mkdir -Force $originalsDir > $null

# Delete any files from the working folder - these are kept between runs in case of errors

Remove-Item $workDir\*.*

# Stop the Apache HTTP server and don't do anything else until it is stopped

Write-Output "Stopping $($serviceName) service"

Stop-Service -Name $serviceName

$service = Get-Service -Name $serviceName

$service.WaitForStatus('Stopped')

Write-Output "$($serviceName) service is stopped - copying files"

# Move the files that need to be processed from the default directory to the working directory

foreach ($item in $logFiles){

Move-Item -Path $baseDir\$($item.name) -Destination $workDir

}

# Restart the Apache HTTP server. It will create the new log files it needs

Write-Output "Restarting $($serviceName) service"

Start-Service -Name $serviceName

$service.WaitForStatus('Running')

Write-Output "$($serviceName) has restarted"

$progressCount = 0

$progressStr = 'Processing ' + $logfiles.Length + ' files...'

Write-Output $progressStr

# Process the working files

foreach ($item in $logFiles){

$progressCount++

$progressStr = 'Processing ' + $progressCount + ' - ' + $item.name

Write-Output $progressStr

# Read the first line of the file and create start date

$readLine = Get-Content $workDir\$($item.Name) -First 1

set-myDateString

$oldDate = $dateStr

$startDate = $dateStr

# Read the last line of the file and create end date

$readLine = Get-Content $workDir\$($item.Name) -Tail 1

set-myDateString

$endDate = $dateStr

# Create the new filename

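# InvariantCulture makes 'MMM' and '/' parse the same whatever the Windows regional settings are

$fileDate = [datetime]::ParseExact($startDate, 'MMM/yyyy', [cultureinfo]::InvariantCulture)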

$newFileName = $item.BaseName + $fileDate.ToString('-yyyy-MM') + $item.extension

if ($startDate -eq $endDate) {

# Copy the file with new filename

Copy-Item $workDir\$($item.Name) -Destination $workDir\$newFileName

}

else {

# Work through each line of the file and check the date time string against the line before

# If they are the same continue writing to the file

# If they are different need to create a new file and write to that

$path = $workDir + '\' + $($item.Name)

$readFile = [IO.File]::OpenText($path)

while ($readFile.Peek() -ge 0) {

$readLine = $readFile.ReadLine()

set-myDateString

if ($dateStr -eq $oldDate) {

$readLine | out-file -filepath $workDir\$newFileName -Append

}

else {

$fileDate = [datetime]::ParseExact($dateStr, 'MMM/yyyy', [cultureinfo]::InvariantCulture)

$newFileName = $item.BaseName + $fileDate.ToString('-yyyy-MM') + $item.extension

$readLine | out-file -filepath $workDir\$newFileName -Append

$oldDate = $dateStr

}

}

# Close the reader so the working file is not left open

$readFile.Close()

}

# Get the current date and copy the original file, with a date and time stamp, to the "originals" folder

$currDate = Get-Date -DisplayHint Date

$newFileName = $item.BaseName + $currDate.ToString('-yyyyMMdd-HHmmss') + $item.extension

Copy-Item $workDir\$($item.Name) -Destination $originalsDir\$newFileName

}

# Get list of files that match filename-yyyy-mm.log in the working directory

$logFiles = @(Get-ChildItem -Path $workDir\*-????-??.log)

$progressCount = 0

$progressStr = 'Finishing ' + $logFiles.Length + ' files...'

Write-Output $progressStr

foreach ($item in $logFiles){

$progressCount++

$progressStr = 'Finishing ' + $progressCount + ' - ' + $item.Name

Write-Output $progressStr

# Test whether any of these files already exist in $baseDir

if (Test-Path -Path $baseDir\$($item.Name) -PathType Leaf) {

$renamedFile = $item.BaseName + '-new' + $item.extension

Rename-Item -Path $workDir\$($item.Name) -NewName $renamedFile

Move-Item $baseDir\$($item.Name) -Destination $workDir\$($item.Name)

$newFileName = $item.BaseName + '-old' + $item.extension

Copy-Item $workDir\$($item.Name) -Destination $workDir\$newFileName

Add-Content -Path $workDir\$($item.Name) -Value $(Get-Content -Path $workDir\$renamedFile)

}

Copy-Item $workDir\$($item.Name) -Destination $baseDir

}
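set-myDateString relies on fixed character positions, so a line that does not start with a timestamp either throws in Substring or produces a meaningless month string, which ParseExact then rejects. The Windows service messages in the error log excerpt earlier ("The 'Apache2.4' service is restarting.") are such lines. Below is a sketch of a regex-based alternative, not part of the script above: Get-LogMonthKey is a hypothetical name, it returns the first day of the month as a [datetime], and it returns $null for undated lines so the caller can decide what to do with them, for example append them to the previous line's monthly file.

```powershell
# Hypothetical alternative to set-myDateString: find the month and year with a
# regular expression instead of fixed character positions.
function Get-LogMonthKey {
    param([string]$Line)

    $culture = [cultureinfo]::InvariantCulture
    # Access log (combined format): [02/Aug/2021:00:05:02 -0400]
    if ($Line -match '\[\d{2}/(?<mon>[A-Z][a-z]{2})/(?<year>\d{4}):') {
        return [datetime]::ParseExact("$($Matches.mon) $($Matches.year)", 'MMM yyyy', $culture)
    }
    # Error log: [Mon Aug 02 04:54:05.980470 2021]
    if ($Line -match '^\[[A-Z][a-z]{2} (?<mon>[A-Z][a-z]{2}) +\d{1,2} [\d:.]+ (?<year>\d{4})\]') {
        return [datetime]::ParseExact("$($Matches.mon) $($Matches.year)", 'MMM yyyy', $culture)
    }
    # No recognisable timestamp, e.g. "The 'Apache2.4' service is restarting."
    return $null
}

# Usage: (Get-LogMonthKey $readLine).ToString('-yyyy-MM') gives the file name suffix for dated lines
```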

It works pretty well and does what I want it to. On the first day of each month, Task Scheduler runs the script from a batch file, which is simply:

start powershell "& ""C:\Users\brisr\Documents\scripts\monthly-split-apache-logs.ps1"""

exit

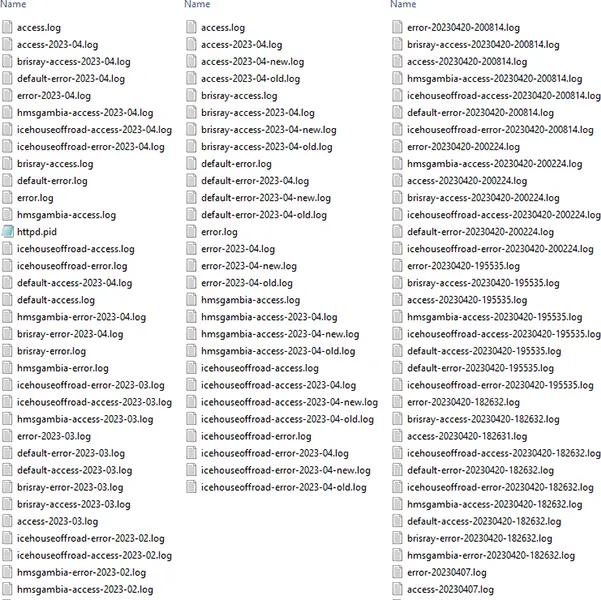

After the monthly split, the directories look like ths:

The main, working and originals folders after running the PowerShell script

The main log folder contains the current Apache log files and the monthly files. The working folder contains both the original and the processed files; these are all deleted the next time the script runs. The originals folder contains the original Apache log files with a date/time stamp added to their names.

Apache Rotatelogs

I did find some help on using rotatelogs on Windows from Peter Lorenzen on A blog without a catchy title. His approach works because Apache pipes the log entries to rotatelogs rather than writing to the log files directly.

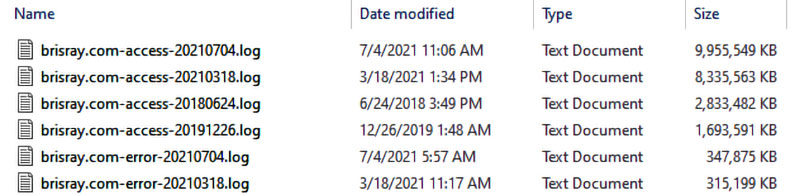

Huge Files

Even small websites such as mine produce a great deal of information in their log files. If left unattended and forgotten about, these files can get ginormous. What on earth can you do with a nearly 10 GB text file? Natively, in Windows, barely anything.

Luckily there are tools and techniques around that can manipulate these large files.

Windows Notepad

So long as nothing else is using a lot of memory, Windows Notepad can easily deal with the 347.8 MB file. If there is not much memory available, Notepad can take a while to load it. It cannot open the 1.6 GB file, and instead gives the message "File is too large for Notepad. Use another editor to edit the file." Going through various files I have, Notepad can open an 800 MB file but not a 1 GB file (Windows 10, 64-bit, 16 GB RAM).

Use a Browser

If all that is needed is to view the file, to peek inside it, then it can be opened in a browser. Chrome, Edge, and Opera can open the nearly 10 GB file; Firefox and Internet Explorer cannot.
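PowerShell can also peek at a huge file without reading all of it into an editor: Get-Content -TotalCount stops reading after the given number of lines, and -Tail returns just the last lines. A sketch; Show-LogEnds is a hypothetical name and the default of 10 lines is arbitrary.

```powershell
# Hypothetical helper: print the first and last few lines of a (possibly huge) log.
function Show-LogEnds {
    param(
        [Parameter(Mandatory)][string]$Path,
        [int]$Lines = 10
    )
    '--- first {0} lines ---' -f $Lines
    # -TotalCount stops reading once enough lines have been returned
    Get-Content -Path $Path -TotalCount $Lines
    '--- last {0} lines ---' -f $Lines
    # -Tail returns only the final lines of the file
    Get-Content -Path $Path -Tail $Lines
}

# Usage:
# Show-LogEnds -Path 'E:\web server logs\originals\brisray.com-access-20210704.log' -Lines 5
```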

Log Parser

Log Parser is a program created by Microsoft specifically to query large text log files. It is a command-line utility, but there are GUIs for it. One is Log Parser Studio (the download link is easy to miss in the article that describes it). Another GUI for the utility is Log Parser Lizard.

Here are some very simple example queries. In these examples, "-i:ncsa" selects Log Parser's NCSA input format, which covers the Common and Combined Log Formats used by web servers.

Show all records from a file:

logparser -i:ncsa "SELECT * FROM 'E:\web server logs\originals\brisray.com-access-20210704.log'"

Show first 10 records from a file:

logparser -i:ncsa "SELECT TOP 10 * FROM 'E:\web server logs\originals\brisray.com-access-20210704.log'"

Write the records between two dates into a new file:

logparser -i:ncsa "SELECT * INTO 'E:\web server logs\newfile.log' FROM 'E:\web server logs\originals\brisray.com-access-20210704.log' WHERE [DateTime] BETWEEN timestamp('2016/01/01', 'yyyy/MM/dd') AND timestamp('2016/02/01', 'yyyy/MM/dd')"

Show last 10 records from a file sorted by date:

logparser -i:ncsa "SELECT TOP 10 * FROM 'E:\web server logs\originals\brisray.com-access-20210704.log' ORDER BY DateTime DESC"

Log Parser pauses the screen output every 10 records with the instruction "Press a key..." This behaviour can be changed with the switch -rtp:<number>, where number is the number of records to display before the "Press a key..." prompt appears, for example -rtp:20

To suppress the "Press a key..." prompt altogether, use -rtp:-1

Mike Lichtenberg has over 50 more complex example queries on LichtenBytes.

PowerShell

What I wanted to do with these large log files was to split them into new files based on month and year. For example, output the brisray.com-access-20210704.log file as brisray-access-2018-11.log, brisray-access-2018-12.log, brisray-access-2019-01.log, and so on. The following code does this in PowerShell.

$path = 'E:\web server logs\originals\brisray.com-access-20210704.log'

# The log analysers use UTF-8 encoding so enforce that in PowerShell

$PSDefaultParameterValues['*:Encoding'] = 'utf8'

$r = [IO.File]::OpenText($path)

while ($r.Peek() -ge 0) {

$line = $r.ReadLine()

# Process $line here...

if($line.Contains("/Oct/2018"))

{

$line | out-file -filepath "F:\server logs\brisray-access-2018-10.log" -Append

}

if($line.Contains("/Nov/2018"))

{

$line | out-file -filepath "F:\server logs\brisray-access-2018-11.log" -Append

}

if($line.Contains("/Dec/2018"))

{

$line | out-file -filepath "F:\server logs\brisray-access-2018-12.log" -Append

}

}

# Close the file when finished

$r.Close()

The date is formatted differently in Apache error logs. For those the comparison I used was:

if($line -like "* Nov* 2018*")

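As usual in scripting, there are several ways of achieving the same thing. I also tried the PowerShell Get-Content cmdlet, but I found the code above ran a little faster on large files than the following code, which uses Get-Content. Assigning the output of Get-Content to a variable, as this version does, also reads the whole file into memory before the loop starts, which matters with multi-gigabyte files.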

# The log analysers use UTF-8 encoding so enforce that in PowerShell

$PSDefaultParameterValues['*:Encoding'] = 'utf8'

$data = get-content "E:\web server logs\originals\brisray.com-access-20210704.log"

foreach($line in $data)

{

if($line.Contains("/Oct/2018"))

{

$line | out-file -filepath "E:\web server logs\brisray-access-2018-10.log" -Append

}

if($line.Contains("/Nov/2018"))

{

$line | out-file -filepath "E:\web server logs\brisray-access-2018-11.log" -Append

}

if($line.Contains("/Dec/2018"))

{

$line | out-file -filepath "E:\web server logs\brisray-access-2018-12.log" -Append

}

}
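Both versions above hard-code each month and pipe every line to out-file, which opens and closes the output file once per line. Below is a sketch of a more general approach, under these assumptions: Split-LogByMonth and its parameters are hypothetical; only the access-log timestamp is recognised (error logs would need a second pattern); lines without a timestamp go to the same file as the line before; and output is UTF-8 without a BOM and with LF line endings, like the Apache originals. Full paths should be used, because .NET classes resolve relative paths against the process directory rather than the PowerShell location.

```powershell
# Hypothetical helper: split an Apache access log into one file per month,
# named <Prefix>-yyyy-MM.log, keeping one open StreamWriter per month.
function Split-LogByMonth {
    param(
        [Parameter(Mandatory)][string]$Path,     # full path of the source log
        [Parameter(Mandatory)][string]$OutDir,   # full path of the output folder
        [Parameter(Mandatory)][string]$Prefix    # e.g. 'brisray-access'
    )
    $culture = [cultureinfo]::InvariantCulture
    $utf8NoBom = New-Object System.Text.UTF8Encoding($false)
    $writers = @{}      # 'yyyy-MM' -> open StreamWriter
    $current = $null    # month of the most recent dated line
    $reader = [IO.File]::OpenText($Path)
    try {
        while ($null -ne ($line = $reader.ReadLine())) {
            # Access-log timestamp: [02/Aug/2021:00:05:02 -0400]
            if ($line -match '\[\d{2}/(?<mon>[A-Za-z]{3})/(?<year>\d{4}):') {
                $date = [datetime]::ParseExact("$($Matches.mon) $($Matches.year)", 'MMM yyyy', $culture)
                $current = $date.ToString('yyyy-MM', $culture)
            }
            if ($null -eq $current) { continue }   # undated lines before the first dated one are skipped
            if (-not $writers.ContainsKey($current)) {
                $outPath = Join-Path $OutDir ('{0}-{1}.log' -f $Prefix, $current)
                # $true = append, so re-running adds to existing monthly files
                $writers[$current] = New-Object System.IO.StreamWriter($outPath, $true, $utf8NoBom)
                $writers[$current].NewLine = "`n"    # LF endings, as Apache writes them
            }
            $writers[$current].WriteLine($line)
        }
    }
    finally {
        $reader.Close()
        foreach ($w in $writers.Values) { $w.Close() }
    }
}

# Usage:
# Split-LogByMonth -Path 'E:\web server logs\originals\brisray.com-access-20210704.log' -OutDir 'E:\web server logs' -Prefix 'brisray-access'
```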

PowerShell Encoding

Be very careful of PowerShell file encoding!

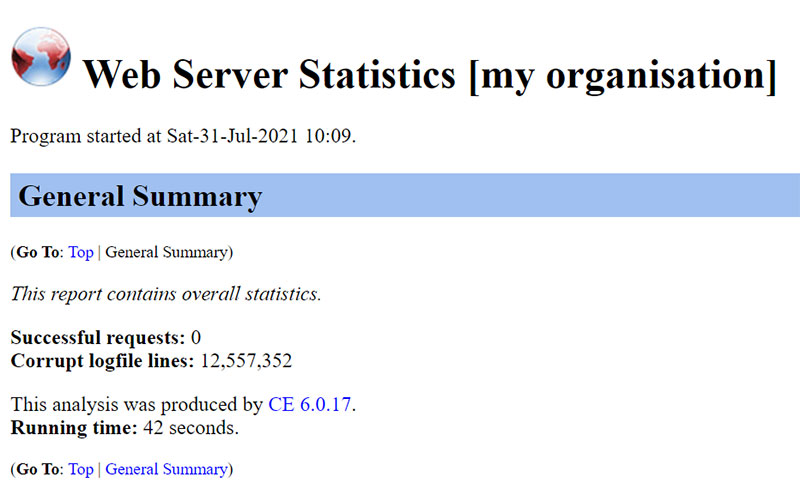

In PowerShell I used [System.Text.Encoding]::Default to find the system encoding. It reported Windows-1252, which is not a subset of UTF-8: the two only share the first 128 (ASCII) characters. After processing 25 GB of old log files into month/year files, the old web log analyzer Analog CE could not read a single line of them!

How PowerShell encodes the files it saves depends on the version. On Windows 10, typing $PSVersionTable in PowerShell gave me version 5.1.19041.1023. $OutputEncoding reported 7-bit US-ASCII (code page 20127), but that variable only sets the encoding used when piping text to external programs; in Windows PowerShell 5.1, Out-File and the > redirection operator write UTF-16 LE by default. Everything seemed fine, but Analog could not read the files!

The text files themselves looked fine in Notepad, but there was obviously something wrong with them, as Analog could read the original files easily. I opened the files in Notepad++ and used it to check the line endings (Edit > EOL Conversion) and the encoding (Encoding). The original files from Apache use Unix-style LF line endings and are UTF-8 encoded. The files I processed in PowerShell had Windows CRLF line endings and were UTF-16 LE BOM encoded.
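The same two checks can be scripted by reading the first bytes of a file for a byte order mark and looking for carriage returns. A minimal sketch: Get-LogFileFormat is a hypothetical name, it only samples the first 64 KB, and a file without a BOM is reported as "no BOM (ASCII, ANSI or UTF-8)" because those cannot be told apart by the BOM alone.

```powershell
# Hypothetical helper: report a file's byte order mark and line-ending style.
# Only the first 64 KB are sampled.
function Get-LogFileFormat {
    param([Parameter(Mandatory)][string]$Path)   # use a full path

    $stream = [IO.File]::OpenRead($Path)
    try {
        $buffer = New-Object byte[] 65536
        $count = $stream.Read($buffer, 0, $buffer.Length)
    }
    finally {
        $stream.Close()
    }

    # Identify the byte order mark, if any
    if ($count -ge 3 -and $buffer[0] -eq 0xEF -and $buffer[1] -eq 0xBB -and $buffer[2] -eq 0xBF) {
        $encoding = 'UTF-8 BOM'
    }
    elseif ($count -ge 2 -and $buffer[0] -eq 0xFF -and $buffer[1] -eq 0xFE) {
        $encoding = 'UTF-16 LE BOM'
    }
    elseif ($count -ge 2 -and $buffer[0] -eq 0xFE -and $buffer[1] -eq 0xFF) {
        $encoding = 'UTF-16 BE BOM'
    }
    else {
        $encoding = 'no BOM (ASCII, ANSI or UTF-8)'
    }

    # Any carriage return (byte 13) in the sample is taken to mean CRLF endings
    $lineEndings = 'LF'
    if ([Array]::IndexOf($buffer, [byte]13, 0, $count) -ge 0) { $lineEndings = 'CRLF' }

    [pscustomobject]@{ Encoding = $encoding; LineEndings = $lineEndings }
}
```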

Using Notepad++ to change the line endings and encoding I found that Analog CE does not care whether the line endings are LF or CRLF and can read ANSI, UTF-8 and UTF-8 BOM (byte order mark) encoded files. It cannot read UTF-16 encoded files at all.

In PowerShell, to avoid this problem, the -Encoding parameter can be used with out-file to force the output file encoding (see the out-file documentation). But what to do with the 557 files, taking up 25 GB, that I had already messed up? Luckily, a single PowerShell pipeline can change the encoding:

get-item 'D:\web server logs backups\*.*' | foreach-object {get-content $_ | out-file ("D:\web server logs backups\new\" + $_.Name) -encoding utf8}
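In Windows PowerShell 5.1, -encoding utf8 writes a UTF-8 BOM, which Analog CE accepts. If BOM-free files with LF endings are wanted, like the Apache originals, .NET stream classes can do the conversion one line at a time. A sketch under stated assumptions: Convert-LogToUtf8 is a hypothetical name, full paths are expected, and the source encoding is detected from its BOM, so files without a BOM are read as UTF-8 (ANSI files with non-ASCII characters would not survive unchanged).

```powershell
# Hypothetical helper: re-encode a text file as UTF-8 without a BOM, with LF endings.
function Convert-LogToUtf8 {
    param(
        [Parameter(Mandatory)][string]$Source,       # full path of the file to convert
        [Parameter(Mandatory)][string]$Destination   # full path of the new file
    )
    $reader = $null
    $writer = $null
    try {
        # $true: detect UTF-8/UTF-16 from a BOM; files without one are read as UTF-8
        $reader = New-Object System.IO.StreamReader($Source, $true)
        $noBom = New-Object System.Text.UTF8Encoding($false)
        # $false: overwrite the destination rather than append to it
        $writer = New-Object System.IO.StreamWriter($Destination, $false, $noBom)
        $writer.NewLine = "`n"    # Unix LF line endings, as Apache writes them
        while ($null -ne ($line = $reader.ReadLine())) {
            $writer.WriteLine($line)
        }
    }
    finally {
        if ($null -ne $writer) { $writer.Close() }
        if ($null -ne $reader) { $reader.Close() }
    }
}

# Usage, mirroring the one-liner above (the 'new' folder must already exist):
# Get-ChildItem 'D:\web server logs backups\*.*' -File | ForEach-Object {
#     Convert-LogToUtf8 -Source $_.FullName -Destination (Join-Path 'D:\web server logs backups\new' $_.Name)
# }
```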

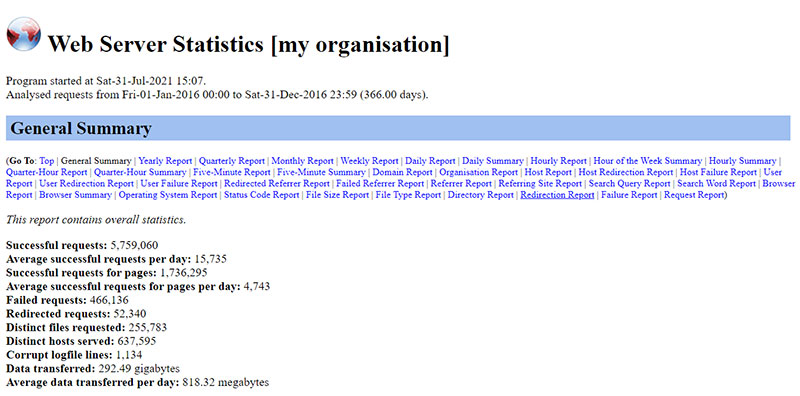

After running the command, the files are all readable by Analog again: